Case Study: Google Compute and Google Services For Core Banking Systems

Updated 25 Apr 2023

Our task was to build a payment transfer system that sits between core banking systems, acting as a layer between client services and banks. To execute an operation, the user sends a request to the service, which calls our server, and our server in turn sends a request to the bank's server, for example to check a balance or to transfer money. Banks provide their APIs and operating instructions. For example, one of the banks used the SWIFT MT940 and MT942 standards, but that does not affect the logic for other banks because the system has a component structure: it was designed so that other banks can be added safely. To complete the task, we chose Google Compute because it provides many useful services and was cheaper for us than Amazon EC2.
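The component structure described above can be illustrated with a minimal sketch. The names `BankConnector`, `InMemoryBank`, and `BankRegistry` are hypothetical and are not taken from the project; amounts are kept as integers in minor units as an assumption.

```php
<?php
// Hypothetical contract that every bank component implements.
interface BankConnector
{
    public function getBalance(string $account): int;
    public function transfer(string $from, string $to, int $amount): bool;
}

// Stand-in component for one bank; a real one would call that bank's API.
final class InMemoryBank implements BankConnector
{
    /** @var array<string, int> balances in minor units (e.g. cents) */
    private array $balances;

    public function __construct(array $balances)
    {
        $this->balances = $balances;
    }

    public function getBalance(string $account): int
    {
        return $this->balances[$account] ?? 0;
    }

    public function transfer(string $from, string $to, int $amount): bool
    {
        // Reject non-positive amounts and transfers that exceed the balance.
        if ($amount <= 0 || $this->getBalance($from) < $amount) {
            return false;
        }
        $this->balances[$from] -= $amount;
        $this->balances[$to] = $this->getBalance($to) + $amount;
        return true;
    }
}

// The layer between services and banks: looks up the component by bank code.
final class BankRegistry
{
    /** @var array<string, BankConnector> */
    private array $banks = [];

    public function register(string $code, BankConnector $bank): void
    {
        $this->banks[$code] = $bank;
    }

    public function get(string $code): BankConnector
    {
        if (!isset($this->banks[$code])) {
            throw new InvalidArgumentException("Unknown bank: $code");
        }
        return $this->banks[$code];
    }
}
```

With this shape, adding another bank means writing one more class that implements the interface and registering it, without touching the existing components.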

With regard to security, we carried out a risk assessment using nmap. When several incorrect queries arrived in a row, the service was blocked for some time. Also, all ports on Google Compute are closed except for a few. They can be opened in the following way:

Go to cloud.google.com, then:

1. Go to my Console.
2. Choose your project.
3. Choose 'Compute Engine'.
4. Choose 'Networks'.
5. Choose the interface for your instances.
6. In the firewalls section, go to 'Create New'.
7. Create your rule; in this case, 'Protocols & Ports' would be, for example, 'tcp:9090'.
8. Save.

Access to the database from outside (that is, from external IPs) is prohibited, but you can add a list of allowed addresses.
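The temporary blocking after several incorrect queries in a row, mentioned above, could look roughly like the sketch below. It is only an illustration: the class name `FailedRequestLimiter`, the thresholds, and the in-memory storage are assumptions, and a real service would keep this state in a shared store.

```php
<?php
// Hypothetical tracker of consecutive failed requests per client.
final class FailedRequestLimiter
{
    private int $maxFailures;
    private int $blockSeconds;
    /** @var array<string, int> consecutive failures per client */
    private array $failures = [];
    /** @var array<string, int> unix time until which a client is blocked */
    private array $blockedUntil = [];

    public function __construct(int $maxFailures = 5, int $blockSeconds = 300)
    {
        $this->maxFailures = $maxFailures;
        $this->blockSeconds = $blockSeconds;
    }

    public function isBlocked(string $client, int $now): bool
    {
        return ($this->blockedUntil[$client] ?? 0) > $now;
    }

    public function recordFailure(string $client, int $now): void
    {
        $count = ($this->failures[$client] ?? 0) + 1;
        if ($count >= $this->maxFailures) {
            // Threshold reached: block the client and start counting afresh.
            $this->blockedUntil[$client] = $now + $this->blockSeconds;
            $count = 0;
        }
        $this->failures[$client] = $count;
    }

    public function recordSuccess(string $client): void
    {
        // A correct query breaks the run of consecutive failures.
        unset($this->failures[$client]);
    }
}
```

The current time is passed in explicitly so that the behaviour can be checked without waiting for the block to expire.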

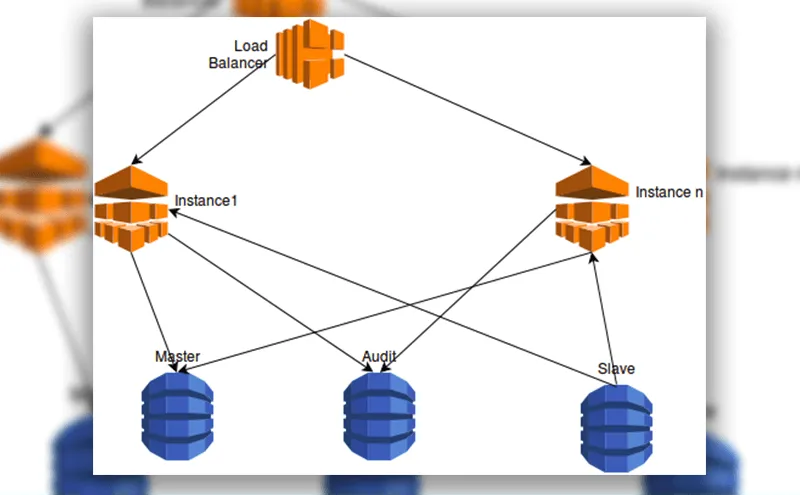

You can choose the following structure for the project:

What is Google Compute?

It is an IaaS service based on the Linux OS. It is designed for launching virtual servers in the cloud and charges an hourly fee for the resources consumed (data storage and computing power). Google Compute was created as a competitor to Amazon EC2.

You can connect to the server through the web interface or through the gcloud tool: copy the connection line from the web interface and run it in the Google Cloud SDK, which can be downloaded from here.

Example of the line for connection via SSH:

./gcloud compute --project "project_name" ssh --zone "zone" "instance_name"

Google services

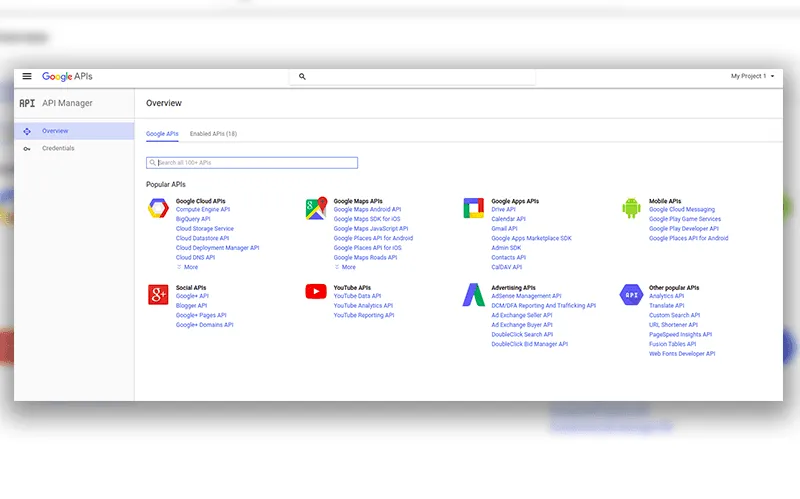

Google has many useful services that simplify the development and optimization of a project. I will describe two of them that developers, including me, use often. There are a number of libraries for interacting with Google services; we used the PHP library. You can test the API with different services here.

Google Cloud SQL

Google Cloud SQL is a service for managing MySQL databases. This service can be used from different programming languages:

- Java;

- Python;

- PHP;

- Go.

MySQL versions 5.5 and 5.6 are fully supported. First Generation instances can have up to 16GB of RAM and 500GB of data storage. Second Generation instances can have up to 104GB of RAM and 10TB of data storage. You can import a database into the cloud and export it from the cloud.

We used three Cloud SQL instances: the first for logging, the second for storing the main data as the master, and the third as a slave. As you can see, we used database replication to take load off the database server.
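One common way to benefit from such replication is to send read-only statements to the replica and everything else to the master. The source does not say how queries were routed in this project, so the helper below, `chooseDatabaseRole`, is a hypothetical sketch of the idea rather than the project's code.

```php
<?php
// Returns 'replica' for read-only statements and 'master' for everything else.
// Hypothetical helper: it only inspects the first keyword of the statement.
function chooseDatabaseRole(string $sql): string
{
    if (preg_match('/^\s*([a-z]+)/i', $sql, $match) !== 1) {
        // Empty or unrecognised input: fall back to the master.
        return 'master';
    }
    $readOnly = ['SELECT', 'SHOW', 'DESCRIBE', 'EXPLAIN'];
    return in_array(strtoupper($match[1]), $readOnly, true) ? 'replica' : 'master';
}
```

A keyword check like this is deliberately simple. Statements such as SELECT ... FOR UPDATE, or reads that must see a write made a moment ago, should still go to the master because replication is not instantaneous.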

We needed to export a database from the instance where the logs are stored and write it to a Google bucket (a bucket can be created with the Google Storage service).

Read the configuration data for initialization:

$auditInstance = Yii::$app->params['auditInstance'];

$project = $auditInstance['projectId'];

$client = new \Google_Client();

Since we make a request from the instance that hosts the project to another instance, we need to use a generated JSON file with the credentials.

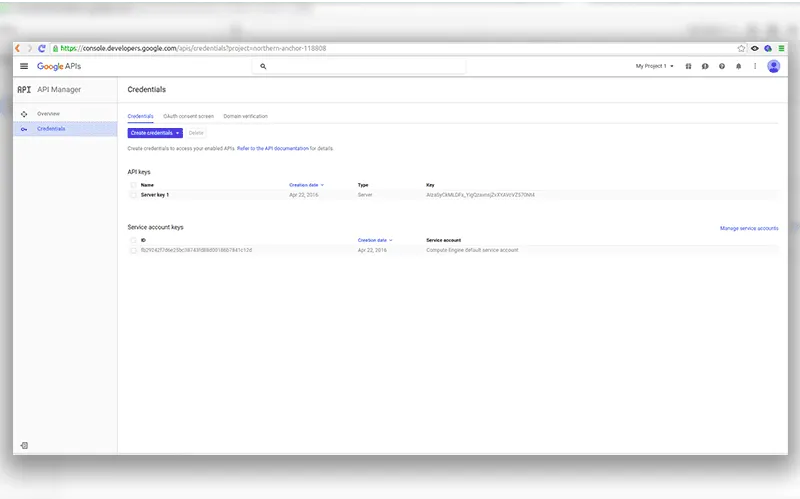

To generate a JSON file you need to open Google Console of the instance and click 'Credentials'.

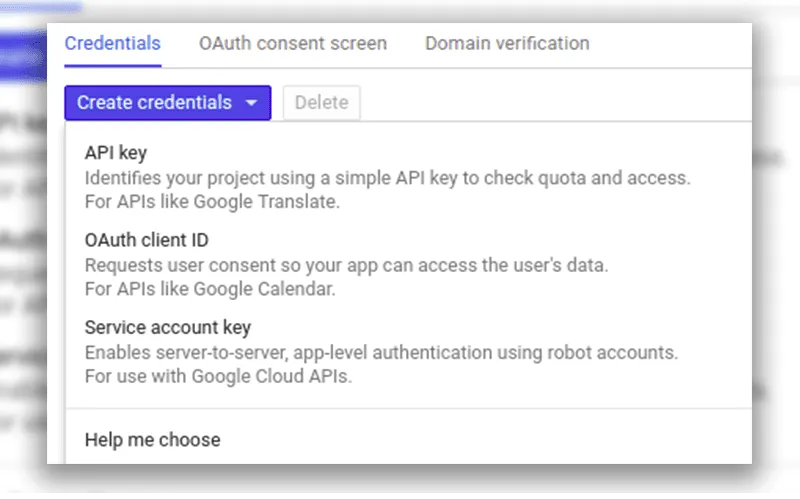

Then click 'Create credentials', and choose 'service account key'.

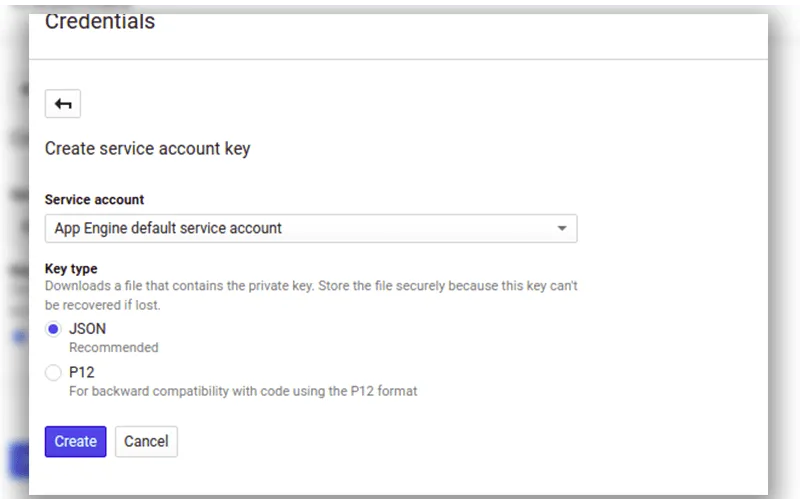

Choose 'App Engine default service account'

Click 'Create'

You will be offered a JSON file with the credentials to download; download it and add it to the project.

It is also necessary to enable the services that are used: open 'Overview', click on the necessary service, then click 'Enable'.

Now the service is available and you can refer to it.

Set permissions for API:

$client->setAuthConfig('path_to_file/file.json');

You should set the following scopes to use the SQL and storage services:

$client->addScope(\Google_Service_SQLAdmin::CLOUD_PLATFORM);

$client->addScope(\Google_Service_Storage::DEVSTORAGE_FULL_CONTROL);

You can read about them here.

Initialize the classes and properties for working with a Cloud SQL database:

$sqlAdmin = new \Google_Service_SQLAdmin($client);

$exportOptions = new \Google_Service_SQLAdmin_ExportContextSqlExportOptions();

$exportOptions->setTables(array());

$context = new \Google_Service_SQLAdmin_ExportContext();

$context->setDatabases(array('name_of_database'));

Set the URI where the dump will be uploaded; $bucket is the bucket name and $object is the path to write to:

$context->setUri('gs://' . $bucket . '/' . $object);

$context->setSqlExportOptions($exportOptions);

$postBody = new \Google_Service_SQLAdmin_InstancesExportRequest();

$postBody->setExportContext($context);

Then export the database to the bucket ($instance is the name of the Cloud SQL instance that holds the logs):

$res = $sqlAdmin->instances->export($project, $instance, $postBody);
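The export call starts a long-running operation, so the dump is not necessarily in the bucket when the call returns. A minimal polling sketch is shown below. The function `waitUntilDone` and its parameters are hypothetical; it assumes you pass in a callable that returns the current operation status as a string and that the status becomes 'DONE' when the export finishes.

```php
<?php
// Polls $fetchStatus until it reports 'DONE' or the attempts run out.
// $fetchStatus is a hypothetical callable returning the operation status string.
function waitUntilDone(callable $fetchStatus, int $maxAttempts = 30, int $delaySeconds = 2): bool
{
    for ($attempt = 1; $attempt <= $maxAttempts; $attempt++) {
        if ($fetchStatus() === 'DONE') {
            return true;
        }
        // Pause between attempts, but not after the last one.
        if ($attempt < $maxAttempts && $delaySeconds > 0) {
            sleep($delaySeconds);
        }
    }
    return false;
}
```

With the PHP client library, the callable would presumably wrap something like `$sqlAdmin->operations->get($project, $res->getName())->getStatus()`; treat that call as an assumption and check it against the library version you use.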

Google Cloud Storage

It is a cloud service for data storage. Google Cloud Storage allows you to store data with high reliability. Google Cloud Storage provides three types of data storage:

- Standard Storage

- Durable Reduced Availability (DRA)

- Cloud Storage Nearline

When choosing, take into account what kind of data you need the storage for. If you need frequent access to the data with a short response time, I advise you to choose Standard Storage. After creating a repository, you have to create a bucket, which is a container for data storage. Its name must be unique: if someone has already created a bucket with the same name, the system won't allow you to use that name. You can create folders and store files inside a bucket.

You can upload or download data in the following ways:

- using Cloud Platform Console;

- using gsutil Tool;

- and, of course, through the API.

You can access the API using a library for a particular language. More information can be found here.

Also, you can migrate data from Amazon S3 to Google Storage.

We used Google Storage to store the logs for each day, since it is impractical to keep the data for the whole period in the MySQL database. It would also be more expensive.
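Storing one dump per day needs a predictable object name for each date. The helpers below are a sketch under assumptions: the `logs/YYYY/MM/DD/audit.sql` layout and the function names are illustrative and not taken from the project. Only the `gs://bucket/object` form matches the URI used for the export earlier.

```php
<?php
// Builds an object path such as "logs/2023/04/25/audit.sql" (illustrative layout).
function dailyLogObjectName(DateTimeInterface $day, string $prefix = 'logs', string $file = 'audit.sql'): string
{
    return sprintf('%s/%s/%s', trim($prefix, '/'), $day->format('Y/m/d'), $file);
}

// Combines a bucket name and an object path into the gs:// form.
function gcsUri(string $bucket, string $object): string
{
    return 'gs://' . $bucket . '/' . ltrim($object, '/');
}
```

Grouping objects by date keeps each day's logs easy to find and makes it simple to delete or archive old periods.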

Here are a few examples of working with the storage.

Each of the methods below calls the useApplicationDefaultCredentials method, which authorizes the service. Since the script runs on the instance, we can use the default credentials without a generated JSON file.

The insert method is intended to upload files to the storage.

public static function insert($bucket, $pathFrom, $pathTo)

{

$client = new \Google_Client();

$client->useApplicationDefaultCredentials();

//add scope for Managing your data and permissions in Google Cloud Storage.

$client->addScope(\Google_Service_Storage::DEVSTORAGE_FULL_CONTROL);

//create object for work with storage

$storage = new \Google_Service_Storage($client);

$obj = new \Google_Service_Storage_StorageObject();

//$pathTo file name (path)

$obj->setName($pathTo);

//upload file to storage

$resIns = $storage->objects->insert(

$bucket,

$obj,

[

'name' => $pathTo,

'data' => file_get_contents($pathFrom),

'uploadType' => 'multipart',

'mimeType' => mime_content_type($pathFrom)

]

);

//check result of inserting

$res = is_a($resIns, 'Google_Service_Storage_StorageObject');

if (!$res)

return false;

$acl = new \Google_Service_Storage_ObjectAccessControl();

$acl->setEntity('allUsers');

$acl->setRole('READER');

$acl->setBucket($bucket);

$acl->setObject($pathTo);

//add permissions for uploaded file

$resAcl = $storage->objectAccessControls->insert($bucket, $pathTo, $acl);

//check adding permissions

$res = is_a($resAcl, 'Google_Service_Storage_ObjectAccessControl');

if (!$res)

return false;

return true;

}

The get method is intended to download files from the storage.

public static function get($bucket, $pathFrom, $pathTo)

{

$client = new \Google_Client();

$client->useApplicationDefaultCredentials();

//add scope for Managing your data and permissions in Google Cloud Storage.

$client->addScope(\Google_Service_Storage::DEVSTORAGE_FULL_CONTROL);

//create object for work with storage

$storage = new \Google_Service_Storage($client);

try {

//get file data from storage

$res = $storage->objects->get($bucket, $pathFrom);

$filePath = $pathTo . '/' . $res->name;

//download file to path, which location in $filePath

file_put_contents($filePath, fopen($res->mediaLink, 'r'));

return $filePath;

} catch (\Google_Service_Exception $e) {

return false;

}

}

The delete method is intended to delete data from the storage:

public static function delete($bucket, $path)

{

$client = new \Google_Client();

$client->useApplicationDefaultCredentials();

//add scope for Managing your data and permissions in Google Cloud Storage.

$client->addScope(\Google_Service_Storage::DEVSTORAGE_FULL_CONTROL);

$storage = new \Google_Service_Storage($client);

//remove file from storage

$res = $storage->objects->delete($bucket, $path);

return $res;

}

The article was intended to show how you can use Google services and how they simplify a developer's life. When developers encounter Google Compute and Google services for the first time, they can get lost in the documentation and spend a very long time searching for solutions to various problems.

So I have tried to describe some commonly used cases. As we have seen, you can optimize data storage by moving data into separate buckets. It is also possible to distribute the load by placing databases in Google Cloud SQL, which is very important.

Evgeniy Altynpara is a CTO and member of the Forbes Councils’ community of tech professionals. He is an expert in software development and technological entrepreneurship and has 10+ years of experience in digital transformation consulting in Healthcare, FinTech, Supply Chain and Logistics.
