ML Kit for Firebase: an Easy-to-use Tool for Machine Learning

Updated 07 Jul 2023

The Google I/O conference is a long-awaited event for developers. This is not surprising: every year Google manages to impress software engineers with outstanding innovations, and 2018 was no exception. Android P, Material Design 2.0, augmented reality in Google Maps, and many other new products were introduced by Google's team.

Growing interest in machine learning pushed the company to focus on it. So the introduction of a new mobile SDK called Firebase ML Kit is now a hot topic in the IT industry.

Let's find out whether this new product is worth using and how it can change the world of mobile development.

Key Features

ML Kit SDK is a brand new product, so first let's go over what we know so far. It is a software development kit that makes it easy to integrate ML models into mobile apps, whether you are a newbie or an experienced programmer.

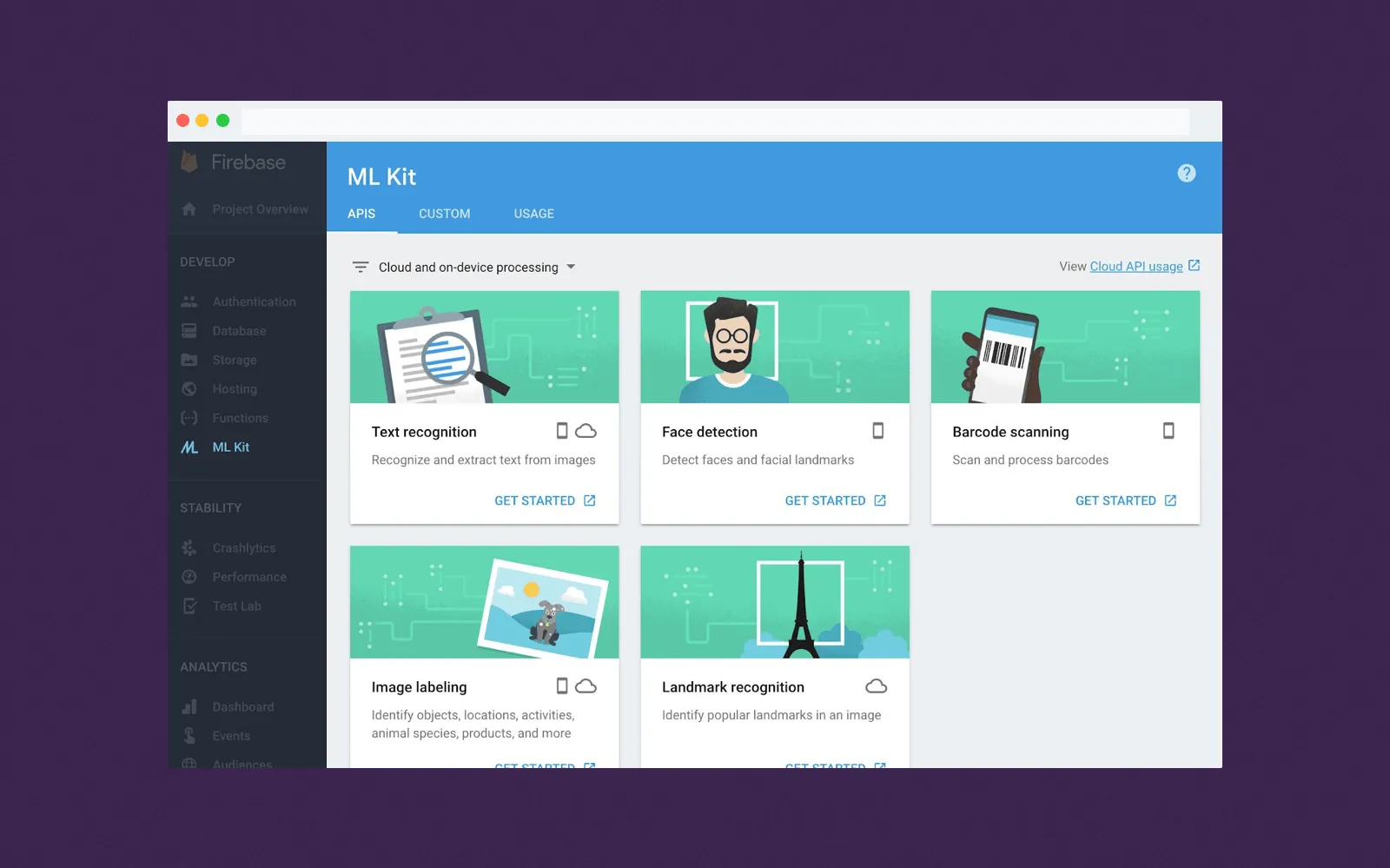

A beta release is now available through the Firebase platform. The basic feature set includes five ready-made APIs. They give applications the ability to:

- recognize text;

- scan barcodes;

- recognize landmarks;

- detect faces;

- label images.

Before we dive into the details of each API, let's look at the main characteristics of ML Kit.

ML Kit Console

Easy for newbies

Typically, adding AI to an app is a challenging and time-consuming process. First, you need to learn an ML library, for instance Torch, Azure ML Studio, TensorFlow, or PyBrain. Next, you have to train your neural network to accomplish a particular task. Think you're at the finish line after this? Wait, that's only half the battle. You still need to produce an ML model that is small enough to run on a mobile device. Only then can you congratulate yourself. Frankly speaking, all of this can be challenging even for experts in machine learning.

With the new SDK, this process is dramatically simplified. All you need to do is pass data to the API and wait for the SDK to send a response. Google's team stated that implementing their APIs doesn't require deep knowledge of neural networks. Just add a few lines of code and enjoy new features in your app!

Custom models

This option is useful for experienced developers. If the base ML Kit APIs don't cover all your needs, you can bring your own ML model. Firebase product manager Sachin Kotwani explained how developers can benefit from adding their own models to ML Kit.

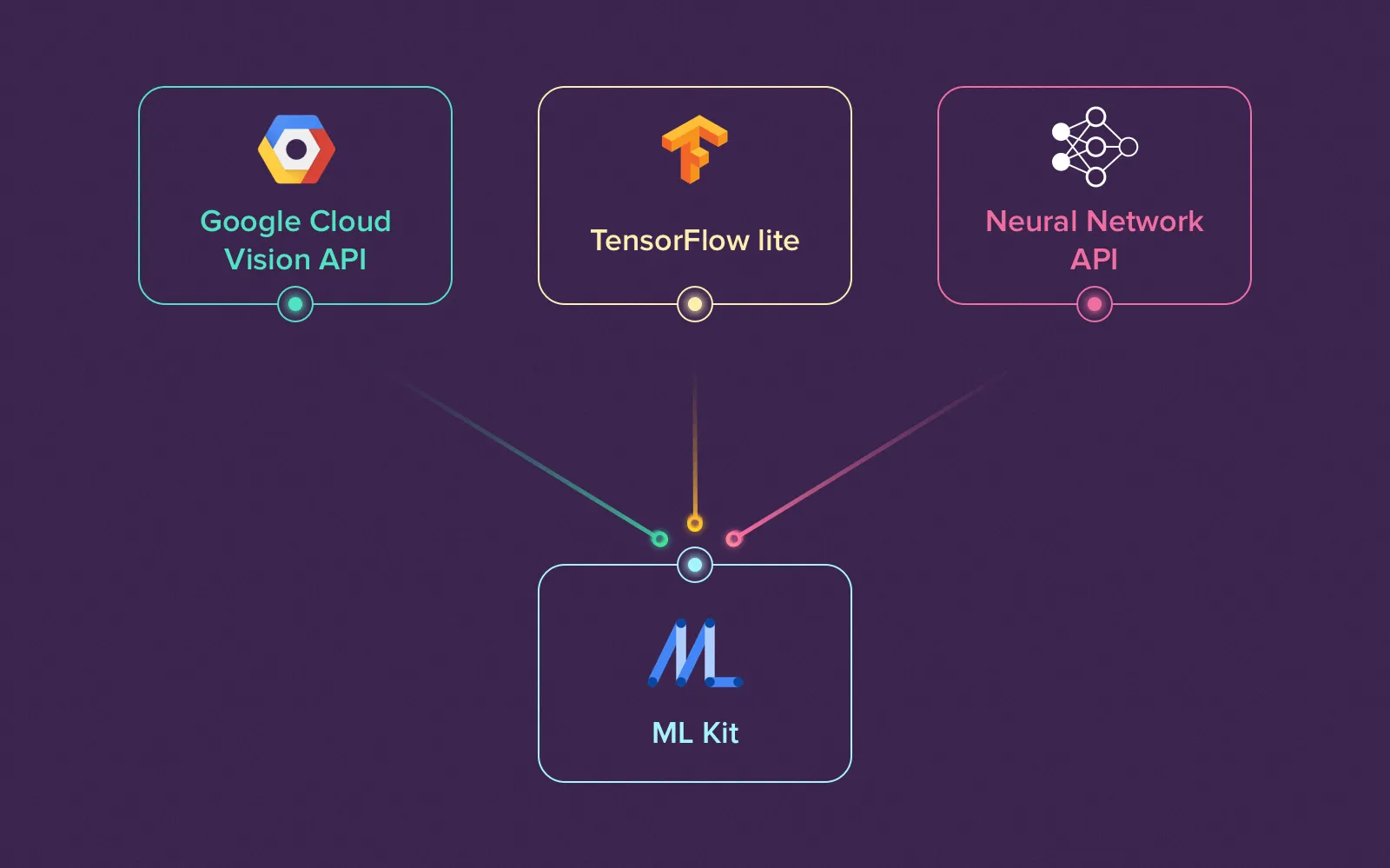

The new SDK works with TensorFlow Lite, a machine learning library for iOS and Android. Mobile developers can upload their own model to the Firebase console and bundle it with their product. If the model is too big, it can stay in the cloud and be downloaded to the app dynamically. This reduces the app's initial size, so users need less time to download it. Another important point is that models are also updated dynamically: a new version of the model can reach users without an update of the whole app.
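The choice between a bundled model and a dynamically downloaded one can be pictured as simple fallback logic. The sketch below is only an illustration in plain Python: `ModelSource` and `pick_model` are hypothetical names made up for this example, not part of the ML Kit SDK, and the version-comparison rule is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelSource:
    """Hypothetical description of one copy of a custom model."""
    name: str
    version: int
    location: str  # "bundled" (shipped in the app) or "downloaded" (from the cloud)


def pick_model(bundled: Optional[ModelSource],
               downloaded: Optional[ModelSource]) -> ModelSource:
    """Return the newest available copy; on a tie, keep the bundled one."""
    candidates = [m for m in (bundled, downloaded) if m is not None]
    if not candidates:
        # Nothing shipped with the app and the download has not finished yet.
        raise LookupError("no model available yet")
    # max() returns the first maximal item, so the bundled copy wins ties.
    return max(candidates, key=lambda m: m.version)
```

With this rule, an app that ships version 1 of a model keeps using it until a newer version has been downloaded, which mirrors the "update the model without updating the app" idea.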

Cloud and on-device APIs

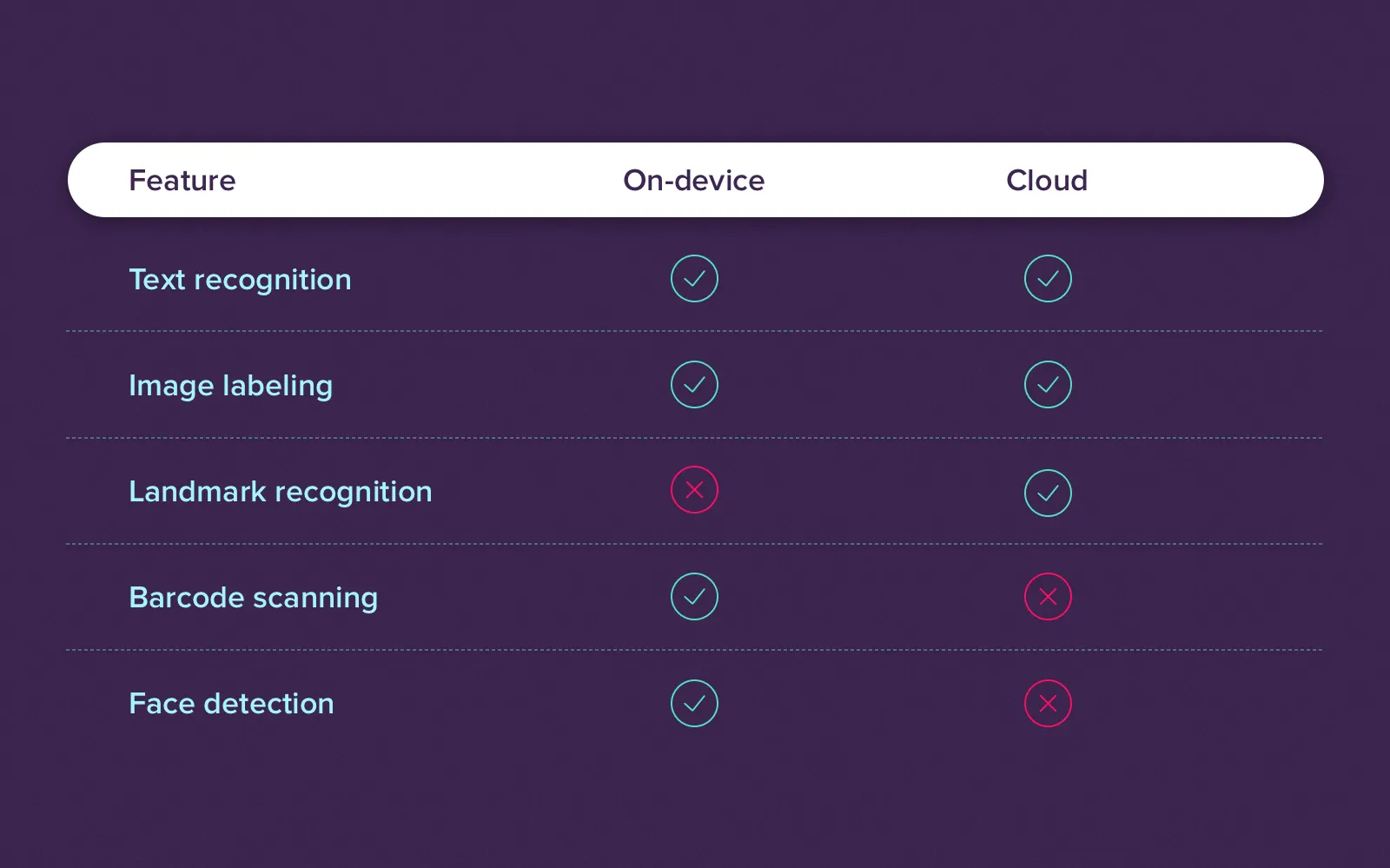

Programmers can choose between cloud-based and on-device APIs. To make the right choice, it's important to consider the differences between the two. Cloud APIs process data on the Google Cloud Platform, so they recognize objects more accurately, but cloud models are larger than on-device ones. On-device models need less storage, work offline, and process data faster, but their accuracy is lower.

Moreover, some features are available only in the cloud or only on the device. Image labeling, text recognition, and landmark recognition work in the cloud. A wider range of features is available offline: on-device APIs scan barcodes, recognize text, label images, and detect faces.

Another difference is pricing. On-device APIs are free of charge. ML Kit for Firebase uses the Cloud Vision API, so the cloud APIs are priced the same as the Cloud Vision API. The cost depends on how many times you call these APIs. The first 1,000 uses per month are free, and each additional thousand uses costs $1.50.

Last but not least is privacy. Google claims that the cloud APIs don't store users' data: it is deleted once processing is done.
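The availability rules above can be written down as a small lookup table. This is a plain-Python sketch for illustration only: `AVAILABILITY` and `choose_mode` are hypothetical names, and the selection policy (on-device by default, cloud when accuracy matters and a connection exists) is an assumption rather than anything the SDK prescribes.

```python
# Where each base feature runs, as listed in this article.
AVAILABILITY = {
    "text recognition": {"on-device", "cloud"},
    "image labeling": {"on-device", "cloud"},
    "landmark recognition": {"cloud"},
    "face detection": {"on-device"},
    "barcode scanning": {"on-device"},
}


def choose_mode(feature: str, online: bool, prefer_accuracy: bool = False) -> str:
    """Pick "on-device" or "cloud" for a feature under the assumed policy."""
    modes = AVAILABILITY[feature]
    # The cloud variant is more accurate, but it needs a connection.
    if prefer_accuracy and online and "cloud" in modes:
        return "cloud"
    # The on-device variant is free, fast, and works offline.
    if "on-device" in modes:
        return "on-device"
    if online:
        return "cloud"
    raise RuntimeError(f"{feature} needs a network connection")
```

For example, `choose_mode("landmark recognition", online=True)` falls through to the cloud, because that feature has no on-device variant.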
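To get a feel for the numbers, here is a rough cost estimate in Python. It is a sketch under one loud assumption: a partially used block of 1,000 calls is billed as a full block. Check the current Cloud Vision pricing page before relying on it; the function name and constants are made up for this example.

```python
FREE_USES_PER_MONTH = 1000        # free tier quoted in this article
PRICE_CENTS_PER_THOUSAND = 150    # $1.50 per additional thousand uses


def estimate_monthly_cost_cents(uses: int) -> int:
    """Estimate the monthly cloud API bill in cents for a number of uses."""
    if uses < 0:
        raise ValueError("uses must be non-negative")
    billable = max(0, uses - FREE_USES_PER_MONTH)
    # Assumption: round up to whole blocks of 1,000 (ceiling division).
    blocks = -(-billable // 1000)
    return blocks * PRICE_CENTS_PER_THOUSAND
```

Under this assumption, 5,000 uses in a month means 4,000 billable uses, that is 4 blocks, or 600 cents ($6.00).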

Cross-platform

Google claims that their new SDK is cross-platform, so developers can add the APIs to both iOS and Android applications. It means that a robust competitor to Apple's Core ML has arrived. But Core ML still has advantages over ML Kit. In addition to TensorFlow, Core ML also accepts ONNX, bespoke Python tools, and Apache MXNet.

Android ML Kit apps can run even on old versions of Android, beginning with Ice Cream Sandwich. For devices with Android 8.1 Oreo and later, Google offers better performance: there, the standard Android Neural Networks API is used for on-device models.

ML Kit for Firebase combines three popular technologies

Interaction with other Firebase services

ML Kit by Google can be easily coupled with other Firebase services. For instance, you can keep image labels in Cloud Firestore. Or measure user engagement with the help of Google Analytics. The integration of Remote Config and ML Kit simplifies A/B testing on custom ML models.

Useful and clear examples

On the Firebase website, programmers can find step-by-step guides on how to implement basic use cases in their applications. Guides are provided for both iOS and Android, so mobile developers can add any of the pre-built APIs to their applications and test the new features in less than an hour.

Advanced Capabilities

We have already mentioned the basic functionality at the beginning of the article. Now let's look at each API in more detail.

ML Kit Text recognition

This is one of two functions available both in the cloud and offline. The on-device version can recognize text only in Latin-based languages. The cloud API identifies a wider range of languages and also handles special characters. It recognizes text not only in documents but also on real-world objects. For instance, it can read license plates or inscriptions on plaques, so this feature works well for videos and photos.

ML Kit Image labeling

This function can also work both offline and online. Once a user uploads a photo, the app shows a list of objects that it has recognized. In addition to each label, ML Kit returns a confidence level represented by a decimal number. This helps to moderate an app's content and to generate metadata automatically.
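As an illustration of how those confidence values might be used for metadata, here is a minimal Python sketch. The `(label, confidence)` pairs, the `labels_to_metadata` helper, and the 0.7 threshold are all assumptions made for this example; they are not ML Kit types or defaults.

```python
from typing import List, Tuple


def labels_to_metadata(labels: List[Tuple[str, float]],
                       min_confidence: float = 0.7) -> List[str]:
    """Keep labels at or above the threshold, most confident first."""
    kept = [(text, conf) for text, conf in labels if conf >= min_confidence]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return [text for text, _ in kept]


# Hypothetical labeling result for one photo.
photo_labels = [("dog", 0.95), ("grass", 0.62), ("park", 0.81)]
tags = labels_to_metadata(photo_labels)  # ["dog", "park"]
```

A lower threshold produces richer but noisier tags, so the right value depends on whether the app is generating search metadata or moderating content.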

ML Kit Landmark recognition

This function is available only in the cloud. When a user uploads an image to the landmark recognition API, it returns the region where the landmark is located along with its geographical coordinates.

Since this is a cloud-based API, free usage is limited: you can call it 1,000 times per month, and after that you have to pay.

ML Kit Face detection

This feature can be especially useful for developers who want to create an app like Snapchat. When the API gets an image, it returns the coordinates of the nose, mouth, eyes, cheeks, and ears. The API also reports whether the person in the picture has their eyes closed or is smiling. Keep in mind that this classification works only for frontal faces. If there is more than one face in the frame, the API gives each person a unique ID.

Another great feature is the speed of face detection. Since the API performs its tasks very quickly, it can be used in real-time apps.

ML Kit Barcode scanning

This API makes it extremely easy to scan barcodes. It reads most linear and 2D barcode formats, including Codabar, ITF, Data Matrix, QR, and Aztec Code. Users don't need to specify the format of the barcode. But if you want to boost scanning speed, you can restrict the detector to particular formats.

ML Kit key capabilities

Weak sides of ML Kit

Because this is a new product, ML Kit has a couple of shortcomings:

Huge size

Custom models are a great opportunity for machine learning experts to implement their own use cases in an application. But these additional ML models can weigh heavily on your app's size: a large model means a large app, and both users and developers suffer.

Beta version

Google's ML Kit was introduced recently, so it is still under development. First, this mostly affects the cloud-based APIs, which are still in preview. If you want to add cloud-based APIs now, you should use the Cloud Vision API directly. Second, small defects and technical issues are common in any beta version. At the moment, the release date of the full-fledged version is unknown.

Use cases

Even though ML Kit for Android and iOS was presented not long ago, companies have already tried it out for their products. VSCO, EyeEm, PicsArt, Fishbrain, and Turbotax have already joined the list of this SDK's users. Let's discover how they use ML Kit APIs.

Lose It!

Lose It! by FitNow is a calorie tracking app that helps people lose weight. FitNow was one of ML Kit's first users, so at the conference Sachin Kotwani demonstrated how the app works. The new function lets customers add information about the products they consume without typing it manually. They just bring the camera close to the product label, and the application reads and analyzes the data. The company used the base text recognition API and added its own custom model.

Lose It! app with text recognition API

Turbotax

Turbotax is an application that simplifies the process of paying taxes. Turbotax developers added the barcode scanning API to the app. When a customer scans the barcode on their driver's license, the program prepopulates his or her information. This step decreases the risk of mistakes and reduces manual data entry.

ML Kit is in early development. Google suggests that developers sign up for upcoming ML Kit for Firebase features, so they can try new updates as they arrive.

What to expect next

Having debuted on May 9th, ML Kit has every chance of becoming a multifunctional machine learning tool. Google has already announced what will be added soon.

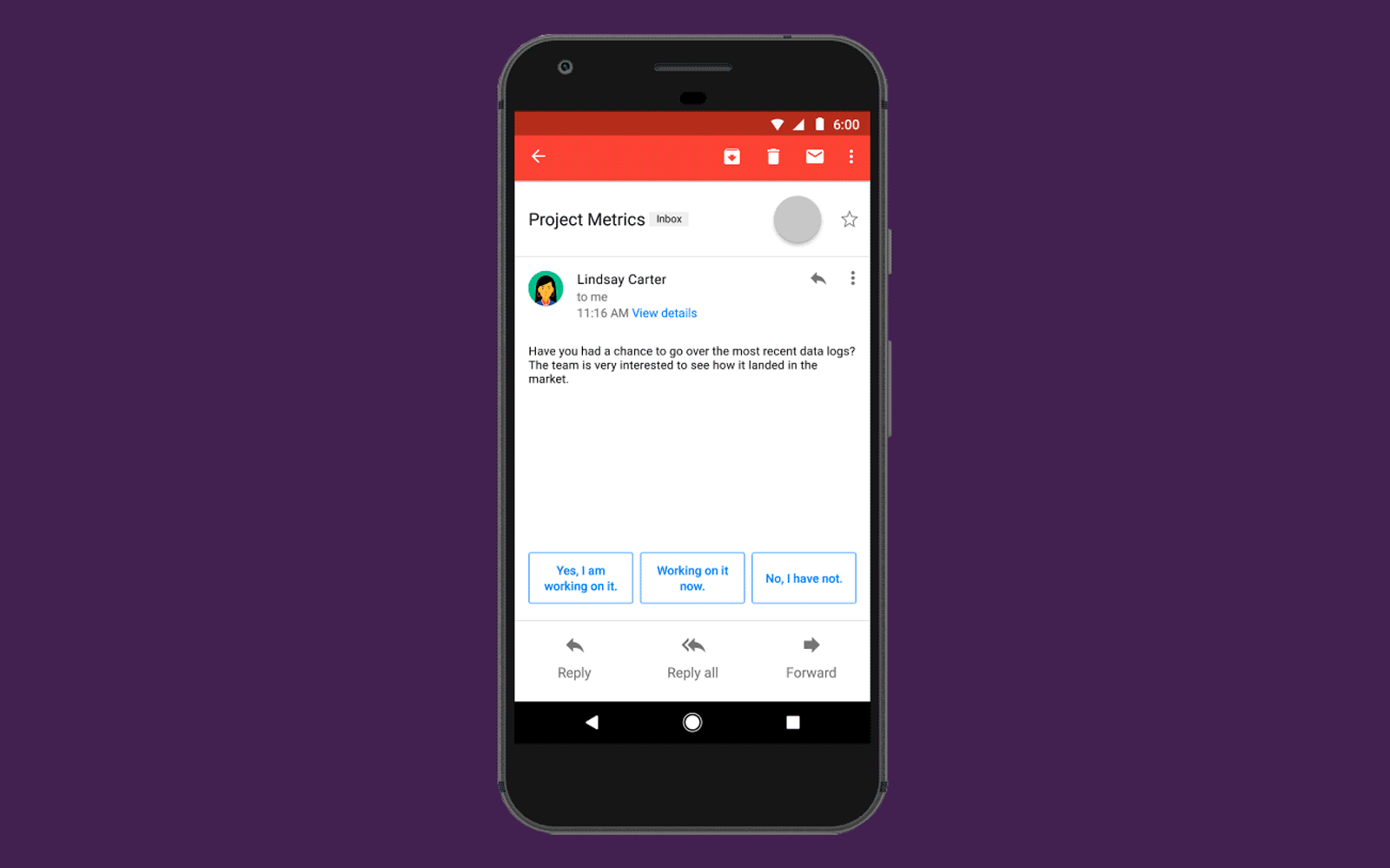

In June, Google will release another base API called Smart Reply. The new API will be added to Gmail. Let's find out how it works. When a user receives a message, Smart Reply scans it and generates short replies. All the suggested variants are then shown to Google Inbox users, so they can choose one and send it at once.

This is how Smart Reply works

We can also expect upgrades to the existing APIs, for instance, face detection with higher accuracy. As announced at Google I/O, the new high-density face contour feature detects over a hundred points on a user's face and processes them at 60 frames per second. This function will be an ideal solution for mobile software that adds masks or other items to a user's face.

The next expected improvement is model compression. As we said earlier, models can be really huge. To avoid this, the company suggests the following strategy: you upload a full TensorFlow model, and it is converted into a TensorFlow Lite model without losing accuracy. But this function is still under development, so it is unavailable for now.

If you have an interesting idea of building ML models, drop us a line and we will help you to transform your idea into reality. And click the subscribe button in order to be always up to date with new trends in software development.

Evgeniy Altynpara is a CTO and member of the Forbes Councils’ community of tech professionals. He is an expert in software development and technological entrepreneurship and has 10+ years of experience in digital transformation consulting in Healthcare, FinTech, Supply Chain, and Logistics.
